Introduction

Pedro Pathing is an advanced Reactive Vector Follower developed by FTC Team 10158 to revolutionize autonomous navigation in robotics. Unlike conventional pathing systems such as RoadRunner, Pedro Pathing leverages Bézier curve generation to produce smoother, faster, and more efficient trajectories. Its primary focus is on enhancing the adaptability of robots during autonomous operation by reacting dynamically to environmental changes, reducing error margins, and ensuring optimal path execution.

By incorporating Bézier curves, the system provides:

- Smoother Transitions: Ensuring less jerky motions for precision-intensive tasks.

- Faster Execution: Reducing time spent in path planning and execution.

- Dynamic Adjustments: Reacting to obstacles or changes in the environment in real-time.

Pedro Pathing is tailored for teams looking to push the boundaries of autonomous efficiency and accuracy. Whether you’re a seasoned team or just getting started with autonomous systems, this documentation will guide you through setting up, tuning, and implementing Pedro Pathing in your projects.

This documentation covers a variety of critical topics regarding Pedro Pathing, such as:

- Setup: Step-by-step instructions to integrate Pedro Pathing into your robot’s codebase.

- Localization Methods: Techniques for precise robot positioning, including odometry and sensor fusion approaches.

- Automatic Tuners: Simplified tuners to help optimize system parameters like PID gains and Bézier control points.

- PIDF Tuning: Comprehensive strategies for perfecting the reactive follower system.

- Code Examples: Practical examples for both autonomous and teleop modes.

- Autonomous Breakdown: A detailed breakdown on the example autonomous that uses Pedro Pathing.

- Common Issues: Solutions to common problems encountered while using Pedro Pathing.

However, this documentation also contains other, more general useful topics such as:

- ADB Setup: A tutorial on how to set up ADB for wireless pushing.

- PID Tutorial: A tutorial on how to tune PID loops for your robot.

- XML Configuration Tutorial: A tutorial on how to configure your robot using XML.

The sidebar provides quick access to each section, ensuring easy navigation through the documentation.

Our code is stored in our library.

This is the Official Pedro Pathing Quickstart.

There is a visualizer for Pedro Path Generation.

This website has been created with ❤️ by Baron and Aarsh!

You can reach us at:

We value community contributions to improve Pedro Pathing. If you have ideas, encounter issues, or wish to enhance this project, we encourage you to contribute:

- Click the Edit button at the top-right of the page.

- Submit an issue detailing your suggestion or problem.

- Ensure your issue is thoroughly described, so we can address it effectively.

Our team will review your input and get back to you as quickly as possible.

Prerequisites for Pedro Pathing

Robot and Drive Type

Pedro Pathing is designed to work with omnidirectional drives, such as mecanum drive. Currently, there is no support for swerve drives.

Project Setup

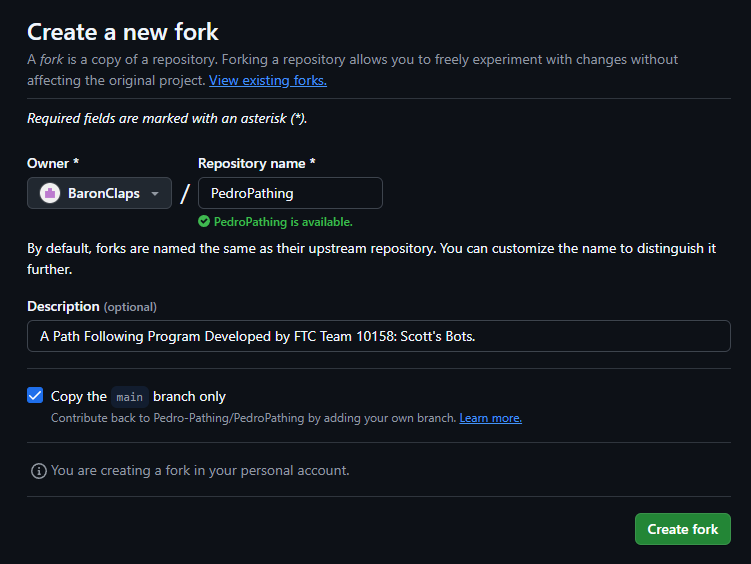

1. If you want to use the quickstart, clone it: https://github.com/Pedro-Pathing/Quickstart.git. Then move on to Constants Setup.

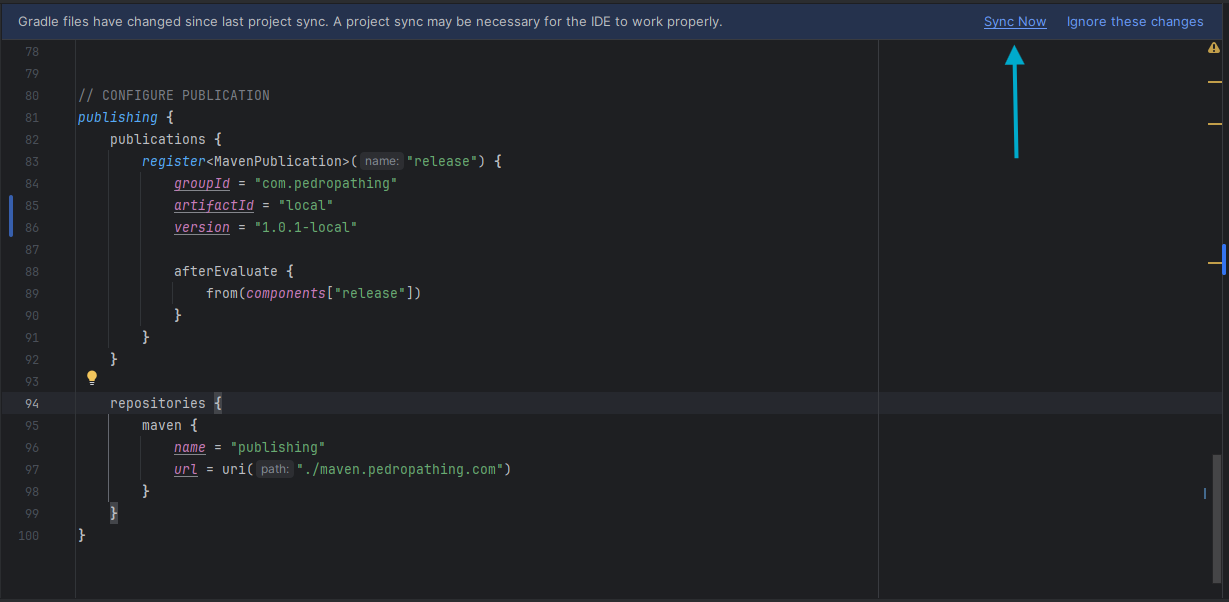

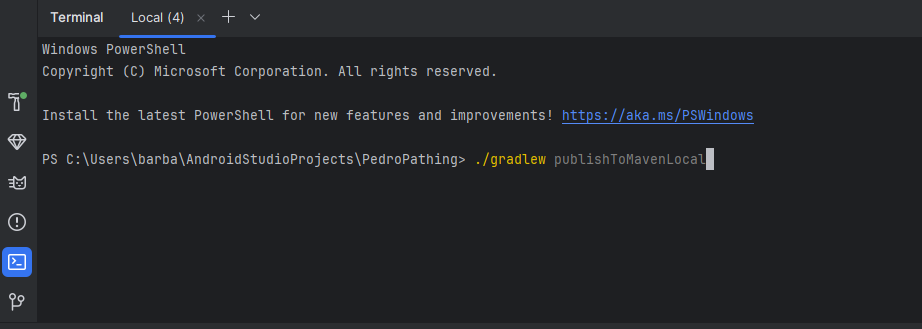

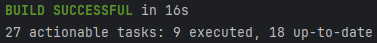

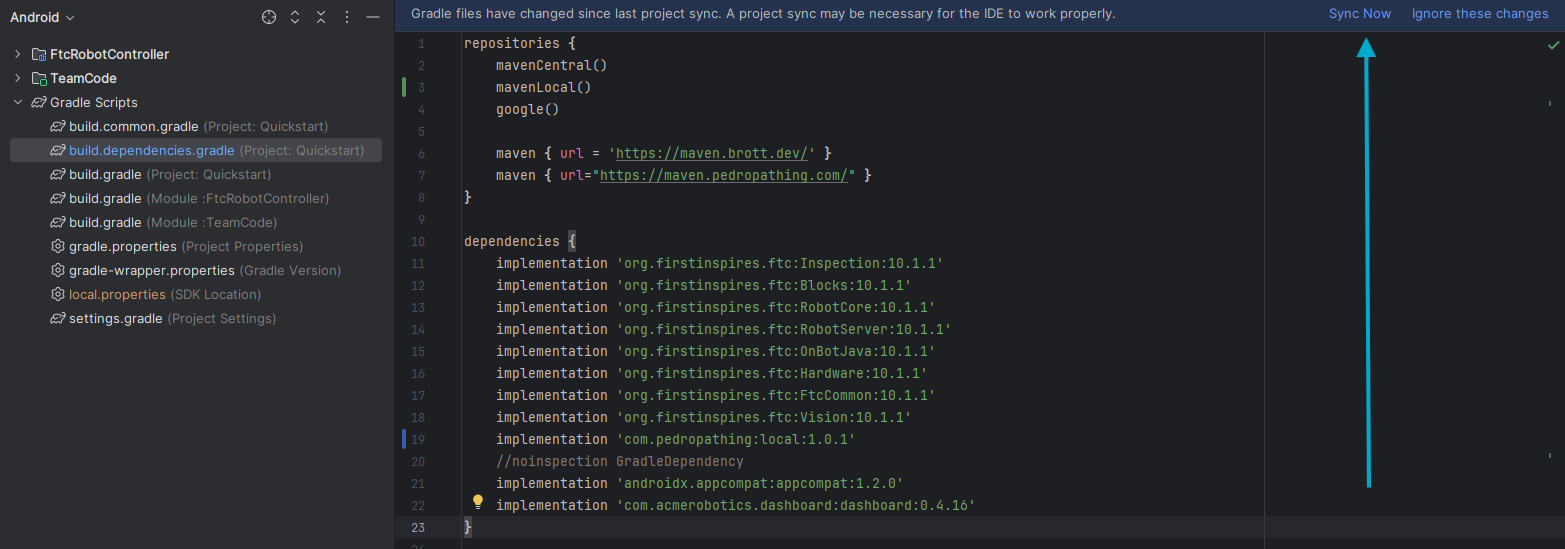

2. If you want to add Pedro to your existing repository, add these two to your repositories{} block in build.dependencies.gradle:

maven { url = 'https://maven.brott.dev/' }

maven { url= 'https://maven.pedropathing.com/' }

3. Then add these two to your dependencies{} block in build.dependencies.gradle:

implementation 'com.pedropathing:pedro:[VERSION]'

implementation 'com.acmerobotics.dashboard:dashboard:0.4.16'

4. Below, there is the latest version of Pedro, replace [VERSION] with it.

5. Lastly, you will have to create a PedroPathing package. Within this you will store the tuners and your two constants files. Download the tuners from the Pedro Pathing Quickstart. Place the tuners into your package and then continue to learn how to setup your constants files.

Constants Setup

Note: If you are not using the quickstart and not uses the names LConstants and FConstants , you will have to adapt the tuners by inputting your classes that have the static{} blocks into the PoseUpdater or into the Follower, depending on which one the tuner/test uses.

This is NOT recommended, as it is much easier to use the same class names as the quickstart.

In these docs, we will refer to the class that modifies the FollowerConstants as FConstants and the class that modifies the specific localizer's constants as LConstants.

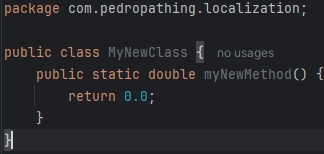

1. There are default values saved within the library, you override them by changing their values in the static {} block in, for example, LConstants or FConstants. These docs will

2. Create a Class that has a static block to modify our FollowerConstants class. This will be refered to as FConstants.

3. We need to make sure to select our localizer type from the Localizers enum.

// This acts as a method of updating FollowerConstants without direct access to it.

public FConstants { // This is how we change Follower Constants.

static {

// Select our localizer

FollowerConstants.localizers = Localizers.THREE_WHEEL;

// We can change the value of any variable/constant of FollowerConstants.

FollowerConstants.mass = 10; // In kg

}

}

4. Then, we need to create another class with a static block to modify our specific localizer's constants. This will be refered to as LConstants.

5. We need to change the values for the localizer. In each localizer's page, there will be a set of default values that you can copy. These are the default values provided in the quickstart:

// This acts as a method of updating ThreeWheelConstants without direct access to it.

public LConstants { // This is how we change ThreeWheelConstants.

static {

ThreeWheelConstants.forwardTicksToInches = .001989436789;

ThreeWheelConstants.strafeTicksToInches = .001989436789;

ThreeWheelConstants.turnTicksToInches = .001989436789;

ThreeWheelConstants.leftY = 1;

ThreeWheelConstants.rightY = -1;

ThreeWheelConstants.strafeX = -2.5;

ThreeWheelConstants.leftEncoder_HardwareMapName = "leftFront";

ThreeWheelConstants.rightEncoder_HardwareMapName = "rightRear";

ThreeWheelConstants.strafeEncoder_HardwareMapName = "rightFront";

ThreeWheelConstants.leftEncoderDirection = Encoder.REVERSE;

ThreeWheelConstants.rightEncoderDirection = Encoder.REVERSE;

ThreeWheelConstants.strafeEncoderDirection = Encoder.FORWARD;

}

}

6. Make sure when you are creating opmodes to follow the guidelines that Constants sets down.

Migrating from the Old Quickstart

Follow all of the steps in the Prerequisites page to setup your new project.

- To start, go to the

LConstantsclass and copy the values from your localizer's default values from its specific page in these docs. - Then, paste them into the

static{}block of theLConstantsclass in the new Quickstart. - Now, open your Localizer in your previous project / repository and copy the values from the localizer, and modify the default values to match your values.

- Next, open the

FConstantsclass. - Set the Localizer to the correct value by setting

FollowerConstants.localizers = Localizers.[LOCALIZER];where[LOCALIZER]is the localizer you are using. - Open your previous project / repository and copy the MODIFIED values from the

FollowerConstantsclass. - Modify the lines in the

static{}block of theFConstantsclass in the new Quickstart to match the values you had before. - If you have any other values that you have changed in the

FollowerConstantsclass, that are not covered in the quickstart, copy them over as well by creating a new line in thestatic{}block of theFConstantsclass. Then doFollowerConstants.[VARIABLE] = [VALUE];, where[VARIABLE]is the variable you want to change from the default and where[VALUE]is the value you want to set it to. - Make sure to update your opmodes to follow the guidelines that Constants sets down.

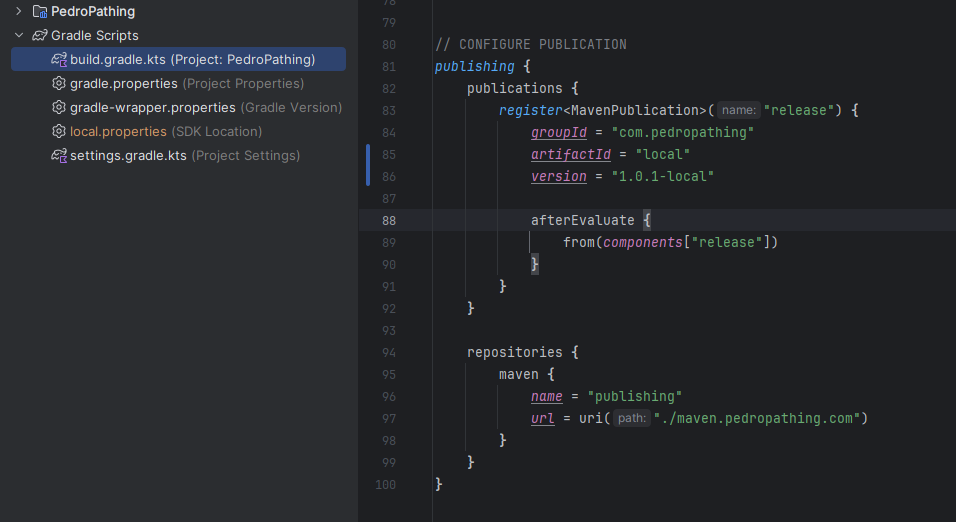

Note: You can locally change the library by following the steps in the Local Changes to the Library page.

Though, changes such as disabling bulk reading are not needed (Pedro now doesn't bulk read) and a fix for different breaking behaviors (between driving forward and backward) is being tested.

Pick your Localizer

These are the current localizers supported:

- Drive Encoder Localizer

- Two-Wheel Localizer

- Two-Wheel + Pinpoint IMU Localizer

- Three-Wheel Localizer

- Three-Wheel + IMU Localizer

- OTOS Localizer

- Pinpoint Localizer

- RR 0.5 to Pedro Localizer

The localizers use a pose exponential method of localization, a way of turning movements from the robot's coordinate frame to the global coordinate frame.

However, the OTOS localizer uses its own onboard system for calculating localization, which we do not know about.

Setup your Localizer

Define your localizer

- Go to your class with a

static{}block, where you will be changing your FollowerConstants (In the Quickstart, this class is calledFConstants). - Once there, type this, where [LOCALIZER] is your localizer of choice:

FollowerConstants.localizers = Localizers.[LOCALIZER];

- The default is THREE_WHEEL, you will not have to do this step if you are using three wheel, as it is the default.

Define your motor names and directions

- Navigate to

FConstantsand into thestatic{}block - Modify your hardware map names for your motors and the directions of your motors. The defaults are:

FollowerConstants.leftFrontMotorName = "leftFront";

FollowerConstants.leftRearMotorName = "leftRear";

FollowerConstants.rightFrontMotorName = "rightFront";

FollowerConstants.rightRearMotorName = "rightRear";

FollowerConstants.leftFrontMotorDirection = DcMotorSimple.Direction.REVERSE;

FollowerConstants.leftRearMotorDirection = DcMotorSimple.Direction.REVERSE;

FollowerConstants.rightFrontMotorDirection = DcMotorSimple.Direction.FORWARD;

FollowerConstants.rightRearMotorDirection = DcMotorSimple.Direction.FORWARD;

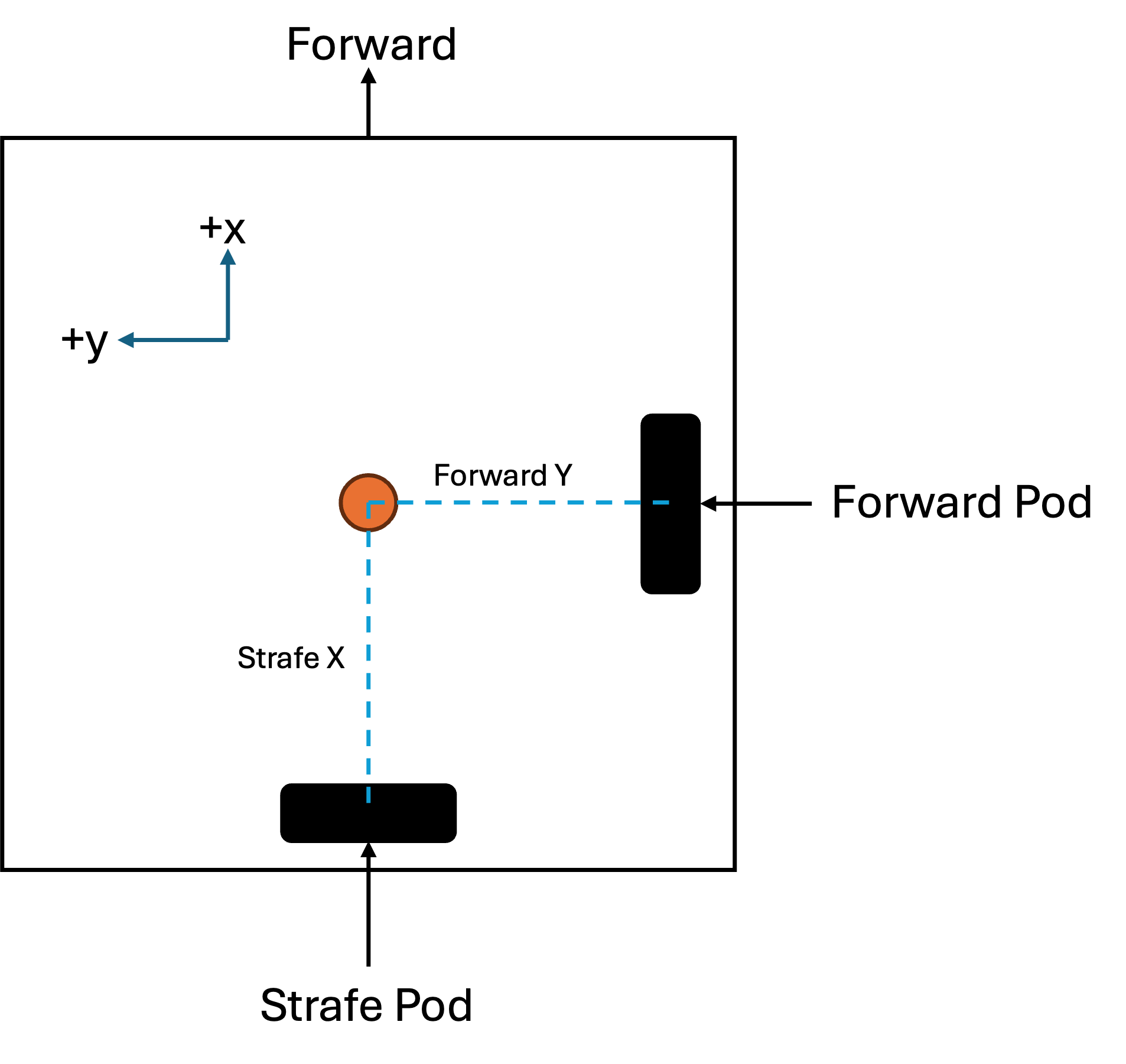

Robot Coordinate Grid

Use this diagram to find the offset of your localizer. This view is from the top of the robot looking downwards

Access

To begin setting up your localizer, go to your class with a static{} block, where you will be changing your Localizer's constants (In the Quickstart, this class is called LConstants).

From there, continue the steps for your localizer in the linked pages:

- Drive Encoder Localizer

- Two Wheel Localizer

- Three Wheel Localizer

- Three Wheel + IMU Localizer

- OTOS Localizer

- Pinpoint Localizer

Setting Up Your Drive Encoder Localizer

Prerequisites

- Encoders attached to all drive motors.

Default Values

These are the default values of the DriveEncoderConstants. You can copy and paste this into your static{} block within LConstants:

DriveEncoderConstants.forwardTicksToInches = 1;

DriveEncoderConstants.strafeTicksToInches = 1;

DriveEncoderConstants.turnTicksToInches = 1;

DriveEncoderConstants.robot_Width = 1;

DriveEncoderConstants.robot_Length = 1;

DriveEncoderConstants.leftFrontEncoderDirection = Encoder.REVERSE;

DriveEncoderConstants.rightFrontEncoderDirection = Encoder.FORWARD;

DriveEncoderConstants.leftRearEncoderDirection = Encoder.REVERSE;

DriveEncoderConstants.rightRearEncoderDirection = Encoder.FORWARD;

Steps

1. Encoder Setup

Open the file LConstants. The motor names are already defined in FConstants, so you don't need to modify them here.

2. Robot Width and Length

Measure your robot's wheelbase in inches, the length - the distance from the forward and back wheels - and width - the distance between the left and right wheels.

Set DriveEncoderConstants.robot_Width and DriveEncoderConstants.robot_Length to the values you found above.

3. Encoder Direction Calibration

- Ensure all encoders tick up when the robot moves forward. Reverse the direction of any encoders that don't follow this convention.

You can do this by changing the Encoder.FORWARD and Encoder.REVERSE values for each motor in the LConstants file.

4. Localizer Tuning

We need to adjust multipliers that convert encoder ticks into real-world measurements (inches or radians). This ensures your localizer's readings are accurate.

a) Forward Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot forward by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasDriveEncoderConstants.forwardTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

b) Lateral Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot sideways (strafing) by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled laterally.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasDriveEncoderConstants.strafeTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

c) Turn Localizer Tuner

-

Position your robot facing a recognizable landmark, like a field tile edge.

-

Spin the robot counterclockwise for one full rotation (or your desired angle).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has spun.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasDriveEncoderConstants.turnTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

Testing Your Localizer

After completing the tuning steps, you can test your localizer's accuracy.

-

Go to

Localization Testand drive your robot around. -

Open the FTC Dashboard at http://192.168.43.1:8080/dash.

-

Switch the view to "field view" from the top right corner dropdown.

-

The dashboard should display the robot's position on the field.

-

Observe the movements, moving the robot forward should make

xincrease and strafing left should makeyincrease.

Congratulations on successfully tuning your localizer!

Setting Up Your Two Wheel Localizer

Prerequisites

- Two odometry wheels connected to motor encoder ports on a hub.

- A properly configured IMU.

Default Values

These are the default values of the TwoWheelConstants. You can copy and paste this into your static{} block within LConstants:

TwoWheelConstants.forwardTicksToInches = .001989436789;

TwoWheelConstants.strafeTicksToInches = .001989436789;

TwoWheelConstants.forwardY = 1;

TwoWheelConstants.strafeX = -2.5;

TwoWheelConstants.forwardEncoder_HardwareMapName = "leftFront";

TwoWheelConstants.strafeEncoder_HardwareMapName = "rightRear";

TwoWheelConstants.forwardEncoderDirection = Encoder.REVERSE;

TwoWheelConstants.strafeEncoderDirection = Encoder.FORWARD;

TwoWheelConstants.IMU_HardwareMapName = "imu";

TwoWheelConstants.IMU_Orientation = new RevHubOrientationOnRobot(RevHubOrientationOnRobot.LogoFacingDirection.UP, RevHubOrientationOnRobot.UsbFacingDirection.LEFT);

Steps

1. Odometry Wheel Setup

Open the file LConstants and navigate to your static{} block. Configure the following:

- Encoder Ports:

- Replace the

TwoWheelConstants.forwardEncoder_HardwareMapNameandTwoWheelConstants.strafeEncoder_HardwareMapNamewith the names of the ports connected to your encoders. - The names will match the hardware map names of the motor port that they are connected to.

- Replace the

- Odometry Measurements:

- Input the

TwoWheelConstants.forwardYandTwoWheelConstants.strafeXvalues. - These values represent the distance of the odometry wheels from the robot's center of rotation on the robot coordinate grid.

- Input the

- Encoder Directions:

- The Encoder Directions can be changed by changing the

Encodervalues forTwoWheelConstants.forwardEncoderDirectionorTwoWheelConstants.strafeEncoderDirection. - Run the

Localization Testand observe the encoder values - If the x value ticks down when the robot moves forward, reverse the direction of the forward pod.

- If the y value ticks down when the robot moves left, reverse the direction of the strafe pod.

- The Encoder Directions can be changed by changing the

- IMU Setup:

- Replace the

TwoWheelConstants.IMU_HardwareMapNamewith the name of the configuration for your IMU. - Make sure that if you are using the built-in IMU, you have it configured to port 0 on the control hub.

- Define the orientation of the IMU on the robot by changing the

RevHubOrientationOnRobotvalue forTwoWheelConstants.IMU_Orientation.

- Replace the

2. Localizer Tuning

We need to adjust multipliers that convert encoder ticks into real-world measurements (inches or radians). This ensures your localizer's readings are accurate.

a) Forward Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot forward by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasTwoWheelConstants.forwardTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

b) Lateral Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot sideways (strafing) by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled laterally.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasTwoWheelConstants.strafeTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

Testing Your Localizer

After completing the tuning steps, you can test your localizer's accuracy.

-

Go to

Localization Testand drive your robot around. -

Open the FTC Dashboard at http://192.168.43.1:8080/dash.

-

Switch the view to "field view" from the top right corner dropdown.

-

The dashboard should display the robot's position on the field.

-

Observe the movements, moving the robot forward should make

xincrease and strafing left should makeyincrease.

Congratulations on successfully tuning your localizer!

Setting Up Your Three Wheel Localizer

Prerequisites

- Three odometry wheels connected to motor encoder ports on a hub.

Default Values

These are the default values of the ThreeWheelConstants. You can copy and paste this into your static{} block within LConstants:

ThreeWheelConstants.forwardTicksToInches = .001989436789;

ThreeWheelConstants.strafeTicksToInches = .001989436789;

ThreeWheelConstants.turnTicksToInches = .001989436789;

ThreeWheelConstants.leftY = 1;

ThreeWheelConstants.rightY = -1;

ThreeWheelConstants.strafeX = -2.5;

ThreeWheelConstants.leftEncoder_HardwareMapName = "leftFront";

ThreeWheelConstants.rightEncoder_HardwareMapName = "rightRear";

ThreeWheelConstants.strafeEncoder_HardwareMapName = "rightFront";

ThreeWheelConstants.leftEncoderDirection = Encoder.REVERSE;

ThreeWheelConstants.rightEncoderDirection = Encoder.REVERSE;

ThreeWheelConstants.strafeEncoderDirection = Encoder.FORWARD;

Steps

1. Odometry Wheel Setup

Open the file LConstants and navigate to your static{} block. Configure the following:

- Encoder Ports:

- Replace the

ThreeWheelConstants.leftEncoder_HardwareMapName,ThreeWheelConstants.rightEncoder_HardwareMapName, andThreeWheelConstants.strafeEncoder_HardwareMapNamewith the names of the ports connected to your encoders. - The names will match the hardware map names of the motor port that they are connected to.

- Replace the

- Odometry Measurements:

- Input the

ThreeWheelConstants.leftY,ThreeWheelConstants.rightY, andThreeWheelConstants.strafeXvalues. - These values represent the distance of the odometry wheels from the robot's center of rotation on the robot coordinate grid.

- Input the

- Encoder Directions:

- The Encoder Directions can be changed by changing the

Encodervalues forThreeWheelConstants.leftEncoderDirection,ThreeWheelConstants.rightEncoderDirection, orThreeWheelConstants.strafeEncoderDirection. - Run the

Localization Testand observe the encoder values - If the x value ticks down when the robot moves forward, reverse the direction of both of the parallel pods (left and right).

- If the x value stays relatively constant when the robot drives forward, that means that one of the parallel pods (left and right) need to be reversed.

- If the y value ticks down when the robot strafe left, reverse the direction of the strafe pod.

- The Encoder Directions can be changed by changing the

2. Localizer Tuning

We need to adjust multipliers that convert encoder ticks into real-world measurements (inches or radians). This ensures your localizer's readings are accurate.

a) Forward Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot forward by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelConstants.forwardTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

b) Lateral Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot sideways (strafing) by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled laterally.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelConstants.strafeTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

c) Turn Localizer Tuner

-

Position your robot facing a recognizable landmark, like a field tile edge.

-

Spin the robot counterclockwise for one full rotation (or your desired angle).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has spun.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelConstants.turnTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

Congratulations on successfully tuning your localizer!

Setting Up Your Three Wheel IMU Localizer

Prerequisites

- Three odometry wheels connected to motor encoder ports on a hub.

- A properly configured IMU.

Default Values

These are the default values of the ThreeWheelIMUConstants. You can copy and paste this into your static{} block within LConstants:

ThreeWheelIMUConstants.forwardTicksToInches = .001989436789;

ThreeWheelIMUConstants.strafeTicksToInches = .001989436789;

ThreeWheelIMUConstants.turnTicksToInches = .001989436789;

ThreeWheelIMUConstants.leftY = 1;

ThreeWheelIMUConstants.rightY = -1;

ThreeWheelIMUConstants.strafeX = -2.5;

ThreeWheelIMUConstants.leftEncoder_HardwareMapName = "leftFront";

ThreeWheelIMUConstants.rightEncoder_HardwareMapName = "rightRear";

ThreeWheelIMUConstants.strafeEncoder_HardwareMapName = "rightFront";

ThreeWheelIMUConstants.leftEncoderDirection = Encoder.REVERSE;

ThreeWheelIMUConstants.rightEncoderDirection = Encoder.REVERSE;

ThreeWheelIMUConstants.strafeEncoderDirection = Encoder.FORWARD;

ThreeWheelIMUConstants.IMU_HardwareMapName = "imu";

ThreeWheelIMUConstants.IMU_Orientation = new RevHubOrientationOnRobot(RevHubOrientationOnRobot.LogoFacingDirection.UP, RevHubOrientationOnRobot.UsbFacingDirection.LEFT);

Steps

1. Odometry Wheel Setup

Open the file LConstants and navigate to your static{} block. Configure the following:

- Encoder Ports:

- Replace the

ThreeWheelIMUConstants.leftEncoder_HardwareMapName,ThreeWheelIMUConstants.rightEncoder_HardwareMapName, andThreeWheelIMUConstants.strafeEncoder_HardwareMapNamewith the names of the ports connected to your encoders. - The names will match the hardware map names of the motor port that they are connected to.

- Replace the

- Odometry Measurements:

- Input the

ThreeWheelIMUConstants.leftY,ThreeWheelIMUConstants.rightY, andThreeWheelIMUConstants.strafeXvalues. - These values represent the distance of the odometry wheels from the robot's center of rotation on the robot coordinate grid.

- Input the

- Encoder Directions:

- The Encoder Directions can be changed by changing the

Encodervalues forThreeWheelIMUConstants.leftEncoderDirection,ThreeWheelIMUConstants.rightEncoderDirection, orThreeWheelIMUConstants.strafeEncoderDirection. - Run the

Localization Testand observe the encoder values - If the x value ticks down when the robot moves forward, reverse the direction of both of the parallel pods (left and right).

- If the x value stays relatively constant when the robot drives forward, that means that one of the parallel pods (left and right) need to be reversed.

- If the y value ticks down when the robot strafe left, reverse the direction of the strafe pod.

- The Encoder Directions can be changed by changing the

- IMU Setup:

- Replace the

ThreeWheelIMUConstants.IMU_HardwareMapNamewith the name of the configuration for your IMU. - Make sure that if you are using the built-in IMU, you have it configured to port 0 on the control hub.

- Define the orientation of the IMU on the robot by changing the

RevHubOrientationOnRobotvalue forThreeWheelIMUConstants.IMU_Orientation.

- Replace the

2. Localizer Tuning

We need to adjust multipliers that convert encoder ticks into real-world measurements (inches or radians). This ensures your localizer's readings are accurate.

a) Forward Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot forward by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelIMUConstants.forwardTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

b) Lateral Localizer Tuner

-

Position a ruler alongside your robot.

-

Push the robot sideways (strafing) by the desired distance (default is 48 inches).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has traveled laterally.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelIMUConstants.strafeTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

c) Turn Localizer Tuner

-

Position your robot facing a recognizable landmark, like a field tile edge.

-

Spin the robot counterclockwise for one full rotation (or your desired angle).

-

The tuner will display two numbers:

-

First number: Distance the robot thinks it has spun.

-

Second number (multiplier)

-

-

(Optional) Run multiple tests and average the multipliers for better accuracy.

-

Input this value in

LConstantsasThreeWheelIMUConstants.turnTicksToInches = [multiplier], where[multiplier]is the value you obtained from the tuner.

Testing Your Localizer

After completing the tuning steps, you can test your localizer's accuracy.

-

Go to

Localization Testand drive your robot around. -

Open the FTC Dashboard at http://192.168.43.1:8080/dash.

-

Switch the view to "field view" from the top right corner dropdown.

-

The dashboard should display the robot's position on the field.

-

Observe the movements, moving the robot forward should make

xincrease and strafing left should makeyincrease.

Note on ESD

If your robot seems to:

- Turn to face a different direction when starting a path

- Actively turning to a fully incorrect angle (or miss with a large untunable/unfixable error)

Your robot's IMU may have interference caused by ESD (electrostatic discharge).

Consider grounding the robot with a grounding strap and reading this guide from FIRST to understand ESD more.

If after all of this you cannot fix the issue, switch to the non-IMU ThreeWheel localizer, as it will not be as harshly affected by ESD and have more accuracy (compared to an interfered IMU).

Congratulations on successfully tuning your localizer!

Setting Up Your OTOS Localizer

Prerequisites

- Ensure the OTOS sensor is connected to an I2C port on your control hub.

- Verify the protective film on the sensor is removed before use.

Default Values

These are the default values of the OTOSConstants. You can copy and paste this into your static{} block within LConstants:

OTOSConstants.useCorrectedOTOSClass = false;

OTOSConstants.hardwareMapName = "sensor_otos";

OTOSConstants.linearUnit = DistanceUnit.INCH;

OTOSConstants.angleUnit = AngleUnit.RADIANS;

OTOSConstants.offset = new SparkFunOTOS.Pose2D(0, 0, Math.PI / 2);

OTOSConstants.linearScalar = 1.0;

OTOSConstants.angularScalar = 1.0;

Steps

1. Setup

- Open the file

LConstantsand navigate to yourstatic{}block. - Ensure the OTOS sensor is connected to an I2C port on your control hub (not port 0, which is reserved for the built-in imu).

- If you are using the corrected OTOS class, set

OTOSConstants.useCorrectedOTOSClasstotrue. - If you are using the original OTOS class, set

OTOSConstants.useCorrectedOTOSClasstofalse. - Make sure that the I2C port that you are using is the same class as the one you are using, determined by above.

- If you are using the corrected OTOS class, set

- Modify or add your hardware map name for the OTOS sensor:

- Add the port name connected to your OTOS sensor by changing the value of

OTOSConstants.hardwareMapName. - The default value is

"sensor_otos".

- Add the port name connected to your OTOS sensor by changing the value of

- Define the sensor's position relative to the center of the robot:

- Measure the X, Y coordinates (in inches) and then change the value of

OTOSConstants.offset. -

- The default value is

new SparkFunOTOS.Pose2D(0, 0, Math.PI / 2).

- The default value is

- Left/right is the y axis and forward/backward is the x axis, with left being positive y and forward being positive x.

- PI/2 radians is facing forward, and clockwise rotation is negative rotation.

- Measure the X, Y coordinates (in inches) and then change the value of

- If you want to change the units for your linear and angular measurements, you can do so by changing

OTOSConstants.linearUnitandOTOSConstants.angleUnitin thestatic{}block ofLConstants.- The default linear unit is

DistanceUnit.INCHand the default angle unit isAngleUnit.RADIANS.

- The default linear unit is

2. Localizer Tuning

a) Linear Scalar (Forward or Lateral Tuning)

Since OTOS has only one linear scalar, you can run either Forward or Lateral Localizer Tuner. The result should be very similar, but not the same as there will be very small, negligible differences.

Option 1: Forward Tuning

- Align a ruler alongside your robot.

- Push the robot forward by the default distance (48 inches) or a custom set value.

- Observe the tuner outputs:

- First number: The distance the robot estimates it has moved.

- Second number: The linear scalar to update.

Option 2: Lateral Tuning

-

Align a ruler alongside your robot.

-

Push the robot 48 inches laterally to the right (default distance) or a custom set value.

-

Observe the tuner outputs:

- First number: The distance the robot estimates it has moved.

- Second number: The linear scalar to update.

-

Add the linear scalar value in

LConstantsin the static block:- On a new line, add (or modify if using the quickstart)

OTOSConstants.linearScalar = [LINEARSCALAR], where [LINEARSCALAR] is the outputted value. - By replacing in

- On a new line, add (or modify if using the quickstart)

-

(Optional): Run multiple tests and average the scalars for better accuracy.

b) Angular Scalar (Turn Tuning)

- Place your robot, so it faces a fixed, recognizable reference point (e.g., aligning an edge with a field tile).

- Run the Turn Localizer Tuner:

- By default, rotate the robot counterclockwise one full rotation.

- Alternatively, you can set a custom angle in the tuner.

- After the rotation:

- First number: The distance the robot estimates it as rotated.

- Second number: The angular scalar you need to input.

- Replace the angular scalar value in

OTOSLocalizer.java(line 78) with the new scalar:- Ensure you replace the value, not add to or multiply the existing one.

- (Optional): Run multiple tests to average the scalars for improved accuracy.

Testing Your Localizer

After completing the tuning steps, you can test your localizer's accuracy.

-

Go to

Localization Testand drive your robot around. -

Open the FTC Dashboard at http://192.168.43.1:8080/dash.

-

Switch the view to "field view" from the top right corner dropdown.

-

The dashboard should display the robot's position on the field.

-

Observe the movements, moving the robot forward should make

xincrease and strafing left should makeyincrease.

Congratulations!

You have successfully tuned your OTOS Localizer. If you encounter any issues, revisit the steps above or reach out for support.

Setting Up Your Pinpoint Localizer

Prerequisites

- Pinpoint module connected to an I2C port.

- Dead wheel encoder wires properly connected to the Pinpoint module.

Default Values

These are the default values of the PinpointConstants. You can copy and paste this into your static{} block within LConstants:

PinpointConstants.forwardY = 1;

PinpointConstants.strafeX = -2.5;

PinpointConstants.distanceUnit = DistanceUnit.INCH;

PinpointConstants.hardwareMapName = "pinpoint";

PinpointConstants.useYawScalar = false;

PinpointConstants.yawScalar = 1.0;

PinpointConstants.useCustomEncoderResolution = false;

PinpointConstants.encoderResolution = GoBildaPinpointDriver.GoBildaOdometryPods.goBILDA_4_BAR_POD;

PinpointConstants.customEncoderResolution = 13.26291192;

PinpointConstants.forwardEncoderDirection = GoBildaPinpointDriver.EncoderDirection.REVERSED;

PinpointConstants.strafeEncoderDirection = GoBildaPinpointDriver.EncoderDirection.FORWARD;

Steps

1. Setup

Navigate to the file LConstants and into the static{} block and configure the following:

- Pinpoint Port:

- Set the

PinpointConstants.hardwareMapNamevariable to the name of the I2C port connected to the Pinpoint module. - Make sure that the I2C port is not the Control Hub built-in IMU port (port 0).

- Set the

- Odometry Measurements:

- Input the

forwardYandstrafeXvalues.- These values represent the distance of the odometry pods from the robot's center of rotation on the PEDRO robot coordinate grid.

- Do NOT use GoBilda's diagram, but Pedro's, linked above.

- The default values are

1forforwardYand-2.5forstrafeX. - The default distance measurement is in inches, which can be changed by modifying the

Pinpoint.distanceUnitvariable.

- Input the

- Encoder Resolution:

- By default, the encoder resolution is set to

GoBildaPinpointDriver.GoBildaOdometryPods.goBILDA_4_BAR_POD. - If you are using a custom encoder resolution, set

PinpointConstants.useCustomEncoderResolutiontotrueand input the custom resolution in thePinpointConstants.customEncoderResolutionvariable.

- By default, the encoder resolution is set to

- Encoder Directions:

- Ensure the encoder directions are correctly set. You can test this by running the

LocalizationTestTeleOp Opmode.- If the

xvalue ticks down when the robot moves forward, reverse the direction by changing theGoBildaPinpointDriver.EncoderDirectionvalues forPinpointConstants.forwardEncoderDirection - If the

yvalue ticks down when the robot moves left, reverse the direction by changing theGoBildaPinpointDriver.EncoderDirectionvalues forPinpointConstans.strafeEncoderDirection.

- If the

- The default values are

GoBildaPinpointDriver.EncoderDirection.REVERSEDforforwardEncoderDirectionandGoBildaPinpointDriver.EncoderDirection.FORWARDforstrafeEncoderDirection.

- Ensure the encoder directions are correctly set. You can test this by running the

- Yaw Scalar:

- If you want to use a yaw scalar, set

PinpointConstants.useYawScalartotrueand input the scalar value in thePinpointConstants.yawScalarvariable. - Note: The Yaw Scalar overrides the calibration done by GoBilda. It is recommended to use leave

PinpointConstants.useYawScalarasfalseunless you have a specific reason to change it.

- If you want to use a yaw scalar, set

2. Verifying Pinpoint Connection

Run the SensorGoBildaPinpointExample.java file located in the tuners folder. This will ensure the Pinpoint is correctly connected and operational.

Testing Your Localizer

After completing the tuning steps, you can test your localizer's accuracy.

-

Go to

Localization Testand drive your robot around. -

Open the FTC Dashboard at http://192.168.43.1:8080/dash.

-

Switch the view to "field view" from the top right corner dropdown.

-

The dashboard should display the robot's position on the field.

-

Observe the movements, moving the robot forward should make

xincrease and strafing left should makeyincrease.

Congratulations on successfully setting up your localizer!

Prerequisites for Automatic Tuners and PID Tuning

Localizer

You will need a tuned localizer to use Pedro Pathing effectively.

Important: Before tuning Pedro Pathing, ensure your localizer is properly tuned. Check out the localization section if you need to do this.

Follower Constants Value

Before running the automatic tuners or the PID tests, you must update your FConstants, using the method explained in the general prerequisites.

Make sure you have these variables all defined if they are different from the defaults:

- The Localizer you will be using (setup in Localization Setup)

- Motor Configuration Names (setup in Localization Setup)

- Motor Directions (setup in Localization Setup)

Mass of your Robot

- You will need to weigh the mass of your robot.

- Convert your mass into kilograms.

- Navigate to

FConstantsand into thestatic{}block. - Input your mass onto a new line (or modify the existing line) by adding

FollowerConstants.mass = [MASS];, where mass is the mass you determined above.

Velocity Tuners

You will first run two velocity tuners:

- Forward measures the forward velocity at full power.

- Strafe measures the strafe velocity at full power.

Forward Velocity Tuner

Purpose

The Forward Velocity Tuner determines the velocity of your robot when moving forward at full power. This value is used for accurate path-following calculations in Pedro Pathing.

Setup and Instructions

- Open the

ForwardVelocityTuner.javaOpMode. - Ensure your robot has enough space to drive 40 inches forward. You can adjust this distance in the FTC Dashboard under the

ForwardVelocityTunerdropdown, but larger distances yield better results. - Run the OpMode.

Output

- Velocity: After the robot has completed the distance, the final velocity will be displayed in telemetry.

- Note: The robot may drift slightly after completing the movement. Ensure the testing area has adequate space.

Inputting the Results

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.xMovement = [OUTPUT], with[OUTPUT]being the velocity output from the tuner.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Congratulations, you’ve completed the forward velocity tuning!

Strafe Velocity Tuner

Purpose

The Strafe Velocity Tuner determines the velocity of your robot when strafing (moving sideways) at full power. This value is essential for accurate path-following in Pedro Pathing.

Setup and Instructions

- Open the

StrafeVelocityTuner.javaOpMode. - Ensure your robot has enough space to strafe 40 inches to the right. You can adjust this distance in the FTC Dashboard under the

StrafeVelocityTunerdropdown, but larger distances yield better results. - Run the OpMode.

Output

- Velocity: After the robot has completed the distance, the final velocity will be displayed in telemetry.

- Note: The robot may drift slightly after completing the movement. Ensure the testing area has adequate space.

Inputting the Results

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.yMovement = [OUTPUT], with[OUTPUT]being the velocity output from the tuner.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Congratulations, you’ve completed the strafe velocity tuning!

Zero Power Tuners

You now need to run two zero power acceleration tuners:

- Forward measures the forward zero power acceleration.

- Lateral measures the lateral zero power acceleration.

Forward Zero Power Acceleration Tuner

Purpose

The Forward Zero Power Acceleration Tuner measures how your robot decelerates when moving forward and power is cut from the drivetrain. This value is critical for improving motion accuracy in Pedro Pathing.

Setup and Instructions

- Open the

ForwardZeroPowerAccelerationTuner.javaOpMode. - Ensure your robot has enough space to accelerate to 30 inches/second forward. You can adjust this velocity in the FTC Dashboard under the

ForwardZeroPowerAccelerationTunerdropdown. - Run the OpMode.

Output

- Deceleration: After the robot reaches the target velocity and power is cut, telemetry will display the robot’s deceleration rate.

- Ensure the robot drifts to a complete stop for accurate measurements.

Inputting the Results

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.forwardZeroPowerAcceleration = [OUTPUT], with[OUTPUT]being the deceleration rate output from the tuner.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Congratulations, you’ve completed the forward zero power acceleration tuning!

Lateral Zero Power Acceleration Tuner

Purpose

The Lateral Zero Power Acceleration Tuner measures how your robot decelerates when strafing (moving sideways) and power is cut from the drivetrain. This value is critical for improving motion accuracy in Pedro Pathing.

Setup and Instructions

- Open the

LateralZeroPowerAccelerationTuner.javaOpMode. - Ensure your robot has enough space to accelerate to 30 inches/second laterally. You can adjust this velocity in the FTC Dashboard under the

LateralZeroPowerAccelerationTunerdropdown. - Run the OpMode.

Output

- Deceleration: After the robot reaches the target velocity and power is cut, telemetry will display the robot’s deceleration rate.

- Ensure the robot drifts to a complete stop for accurate measurements.

Inputting the Results

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.lateralZeroPowerAcceleration = [OUTPUT], with[OUTPUT]being the deceleration rate output from the tuner.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Congratulations, you’ve completed the lateral zero power acceleration tuning!

Introduction to PID Tuning

Overview

Pedro Pathing relies on Proportional-Integral-Derivative (PID) controllers to ensure precise path-following performance. This guide will walk you through tuning the various PID controllers for translational, heading, drive, and centripetal corrections.

One vs. Two PID Systems

In Pedro Pathing, you can choose between using one or two PID controllers for each correction type:

-

Single PID System (Default):

- A single PID is responsible for managing all errors.

- Recommended for simplicity and most use cases.

-

Two PID System:

- Includes a main PID for handling larger errors and a secondary PID for smaller corrections.

- To enable this system:

- Open

FConstants. - Set any of the following booleans to

trueby adding, in a new line in thestatic{}block:FollowerConstants.useSecondaryTranslationalPID = trueFollowerConstants.useSecondaryHeadingPID = trueFollowerConstants.useSecondaryDrivePID = true

- Tune both the main and secondary PIDs:

- Main PID: Moves the error into the secondary PID’s range without causing overshoot.

- Secondary PID: Corrects small errors quickly and minimizes oscillations.

- Open

Tuning Steps Overview

Each PID has a specific purpose and is tuned independently:

- Translational PID: Maintains the robot on its path.

- Heading PID: Controls rotational alignment.

- Drive PID: Manages braking and acceleration along the path.

- Centripetal Force Correction: Corrects for deviations on curved paths.

Proceed through the following sections to tune each PID.

Tuning the Translational PID

Purpose

The translational PID ensures the robot follows a straight path without lateral deviation. It corrects the robot’s position along an imaginary forward line from its starting position.

Setup

- Open FTC Dashboard and go to the Follower tab.

- Enable

useTranslationaland disable all other checkboxes. - Run the

StraightBackAndForthOpMode. - Ensure the timer for autonomous OpModes is disabled.

Tuning Process

- Push the robot laterally (left or right) to test its correction response.

- Adjust the PID constants (

translationalPIDF) in the FollowerConstants tab of FTC Dashboard.- Goal: Minimize oscillations while maintaining accuracy.

Feedforward Adjustments

If additional feedforward is needed, use translationalPIDFFeedForward for corrections in the robot’s movement direction. Avoid modifying the feedforward term directly in the PIDF.

Testing

Test by pushing the robot with varying force and from different directions. Observe its ability to correct smoothly and accurately without oscillations.

Input Tuned Values

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.translationalPIDFCoefficients = new CustomPIDFCoefficients(P,I,D,F);, withP,I,D, andFbeing the values you tuned for and inputted into FTC Dashboard.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Secondary PIDF Tuning

If you are going to use the secondary PIDF for the translational error, you can tune the secondary PIDF coefficients by following the same process as the primary PIDF coefficients.

Input them into the FollowerConstants.secondaryTranslationalPIDFCoefficients in the FConstants class the same way that the primary PIDF coefficients are inputted.

Congratulations, you’ve completed the translational PIDF tuning!

Now, move onto the next section to tune your Heading PIDF(s).

Tuning the Heading PID

Purpose

The heading PID ensures the robot maintains its rotational alignment, correcting deviations from its desired heading.

Setup

- Open FTC Dashboard and go to the Follower tab.

- Enable

useHeadingand disable all other checkboxes. - Run the

StraightBackAndForthOpMode. - Ensure the timer for autonomous OpModes is disabled.

Tuning Process

- Rotate the robot manually from one corner and observe its correction response.

- The robot should correct its heading smoothly without moving laterally.

- Adjust the PID constants (

headingPIDF) in the FollowerConstants tab of FTC Dashboard.- Goal: Reduce oscillations while maintaining precise alignment.

Testing

Repeat the tuning process with varying rotation angles and directions to ensure consistent performance.

Input Tuned Values

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.headingPIDFCoefficients = new CustomPIDFCoefficients(P,I,D,F);, withP,I,D, andFbeing the values you tuned for and inputted into FTC Dashboard.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Secondary PIDF Tuning

If you are going to use the secondary PIDF for heading error, you can tune the secondary PIDF coefficients by following the same process as the primary PIDF coefficients.

Input them into the FollowerConstants.secondaryHeadingPIDFCoefficients in the FConstants class the same way that the primary PIDF coefficients are inputted.

Congratulations, you’ve completed the Heading PIDF tuning!

Now, move onto the next section to tune your Drive PIDF Tuning.

Tuning the Drive PID

Purpose

The drive PID manages acceleration and braking along a path, ensuring smooth motion and minimizing overshoot.

Setup

- Open FTC Dashboard and go to the Follower tab.

- Enable

useDrive,useHeading, anduseTranslational. - Run the

StraightBackAndForthOpMode. - Ensure the timer for autonomous OpModes is disabled.

Zero Power Acceleration Multiplier

- Open the

FConstantsclass and navigate to thestatic{}block. - Set the

zeroPowerAccelerationMultiplieron a new line by addingFollowerConstants.zeroPowerAccelerationMultiplier = [VALUE], where[VALUE]is the value you want to set it to.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

This value determines deceleration speed:

- Higher values: Faster braking but more oscillations.

- Lower values: Slower braking with fewer oscillations.

Tuning Process

-

Adjust the PID constants (

drivePIDF) in the FollowerConstants tab of FTC Dashboard.- Proportional (P): Start with very low values (e.g., hundredths or thousandths).

- Derivative (D): Use small values (e.g., hundred-thousandths or millionths).

- Integral (I): Avoid using this term; it can cause accumulated errors and oscillations.

-

Test the response during braking:

- Reduce oscillations for smoother stops.

Testing

Repeat the tuning process with varying rotation angles and directions to ensure consistent performance.

Input Tuned Values

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.drivePIDFCoefficients = new CustomFilteredPIDFCoefficients(P,I,D,T,F);, withP,I,D,T, andFbeing the values you tuned for and inputted into FTC Dashboard.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Secondary PIDF Tuning

If you are going to use the secondary PIDF for drive error, you can tune the secondary PIDF coefficients by following the same process as the primary PIDF coefficients.

Input them into the FollowerConstants.secondaryDrivePIDFCoefficients in the FConstants class the same way that the primary PIDF coefficients are inputted.

Kalman Filter Adjustments (Optional)

The drive PID uses a Kalman filter to smooth error responses:

- Model Covariance: Default is

6. - Data Covariance: Default is

1. - Adjust the time constant (default

0.6) to change the weighted average of derivatives.

Feel free to experiment with these settings for optimal performance.

Congratulations, you’ve completed the drive PIDF tuning!

Now, move onto the next section to tune your Centripetal Scaling.

Tuning Centripetal Force Correction

Purpose

The centripetal force correction corrects deviations from curved paths, ensuring the robot maintains an optimal trajectory.

Setup

- Open FTC Dashboard and go to the Follower tab.

- Enable all checkboxes.

- Run the

CurvedBackAndForthOpMode. - Ensure the timer for autonomous OpModes is disabled.

Tuning Process

-

Observe the robot’s path:

- If the robot corrects towards the inside of the curve, increase

centripetalScaling. - If the robot corrects towards the outside of the curve, decrease

centripetalScaling.

- If the robot corrects towards the inside of the curve, increase

-

Adjust the value of

centripetalScalingwithin theFollowerConstantssection in FTC Dashboard.

Testing

Run additional tests with curved paths like CurvedBackAndForth or custom paths. Fine-tune the scaling value for smooth and accurate performance.

Input Tuned Values

- Open the

FConstantsclass and navigate to thestatic{}block. - Then, on a new line, add

FollowerConstants.centripetalScaling = [VALUE];, with [VALUE] being the value you tuned for and inputted into FTC Dashboard.

Note: In Step 2, you only need to make a new line if you are not using the quickstart, otherwise, you can just modify the line that already does this.

Note: The default value for centripetalScaling is 0.0005.

Testing Your Tuned PID Controllers

Purpose

After completing the tuning of all PIDFs and tuning centripetal scaling, it’s important to validate the overall performance of your robot under real-world conditions. This step ensures that your robot follows paths accurately and smoothly.

Recommended Tests

1. Circle

- The Circle test is primarily for fun but can also be used to observe the robot’s handling of continuous curves.

- Look for:

- Smooth and consistent movement along the circular trajectory.

2. Triangle

- The Triangle test evaluates the robot’s ability to navigate sharp turns and straight segments.

- It will drive in a triangular path, starting at the bottom-middle vertex.

3. Custom Paths

- Create custom paths to test the robot’s performance in scenarios closer to match conditions.

- Look for:

- Consistency across different types of paths.

- Smooth transitions between path segments.

Fine-Tuning Tips

- Observe the robot’s performance during each test.

- If you notice any issues (e.g., overshooting, slow corrections, oscillations), revisit the corresponding PID and make small adjustments.

- Alternate between different tests to ensure the robot performs well in diverse scenarios.

Autonomous Building

If you are confused and lost on how to make a Pedro autonomous, check out the Autonomous Building for a step-by-step guide on how to build an autonomous using Pedro.

Documentation and Support

If further improvements are needed or you encounter issues, please join the Pedro Pathing Discord Server to ask questions! Feel free to make an issue on this repo by clicking the edit button at the top right of the page.

Final Thoughts

Congratulations on completing your PIDF tuning and testing! Best of luck to your team this season!

Writing a PedroPathing Autonomous

This guide provides a comprehensive overview of how to create an autonomous routine using PedroPathing. It includes detailed explanations for poses, paths, and logic flow, ensuring clarity and usability.

Step One: Imports

Include the required package and imports for PedroPathing functionalities:

package pedroPathing.examples;

import com.pedropathing.follower.Follower;

import com.pedropathing.localization.Pose;

import com.pedropathing.pathgen.BezierCurve;

import com.pedropathing.pathgen.BezierLine;

import com.pedropathing.pathgen.Path;

import com.pedropathing.pathgen.PathChain;

import com.pedropathing.pathgen.Point;

import com.pedropathing.util.Timer;

import com.qualcomm.robotcore.eventloop.opmode.Autonomous;

import com.qualcomm.robotcore.eventloop.opmode.OpMode;

import pedroPathing.constants.FConstants;

import pedroPathing.constants.LConstants;

Step Two: Pose Initialization

Poses define the robot's position (x, y and heading) on the field. Below are the key poses for this autonomous:

private final Pose startPose = new Pose(9, 111, Math.toRadians(270)); // Starting position

private final Pose scorePose = new Pose(14, 129, Math.toRadians(315)); // Scoring position

private final Pose pickup1Pose = new Pose(37, 121, Math.toRadians(0)); // First sample pickup

private final Pose pickup2Pose = new Pose(43, 130, Math.toRadians(0)); // Second sample pickup

private final Pose pickup3Pose = new Pose(49, 135, Math.toRadians(0)); // Third sample pickup

private final Pose parkPose = new Pose(60, 98, Math.toRadians(90)); // Parking position

private final Pose parkControlPose = new Pose(60, 98, Math.toRadians(90)); // Control point for curved path

Step Three: Path Initialization

The buildPaths method creates all the paths and path chains required for the autonomous routine. Each path connects two or more poses and includes heading interpolation to control the robot's orientation during movement.

Explanation of Path Components:

- BezierLine: Creates a straight path between two points.

- BezierCurve: Creates a curved path using control points.

- Heading Interpolation: Determines how the robot's heading changes along the path:

- Linear: Gradually transitions between start and end headings.

- Constant: Maintains a fixed heading.

- Tangential: Aligns the heading with the path direction.

Path Definitions:

private Path scorePreload, park;

private PathChain grabPickup1, grabPickup2, grabPickup3, scorePickup1, scorePickup2, scorePickup3;

public void buildPaths() {

// Path for scoring preload

scorePreload = new Path(new BezierLine(new Point(startPose), new Point(scorePose)));

scorePreload.setLinearHeadingInterpolation(startPose.getHeading(), scorePose.getHeading());

// Path chains for picking up and scoring samples

grabPickup1 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup1Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup1Pose.getHeading())

.build();

scorePickup1 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup1Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup1Pose.getHeading(), scorePose.getHeading())

.build();

grabPickup2 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup2Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup2Pose.getHeading())

.build();

scorePickup2 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup2Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup2Pose.getHeading(), scorePose.getHeading())

.build();

grabPickup3 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup3Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup3Pose.getHeading())

.build();

scorePickup3 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup3Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup3Pose.getHeading(), scorePose.getHeading())

.build();

// Curved path for parking

park = new Path(new BezierCurve(new Point(scorePose), new Point(parkControlPose), new Point(parkPose)));

park.setLinearHeadingInterpolation(scorePose.getHeading(), parkPose.getHeading());

}

This ensures all paths are ready before the autonomous routine begins, since we call it in init.

Step Four: Managing Path States

The pathState variable tracks the robot's progress. Each state in the autonomousPathUpdate() method corresponds to a specific action or movement. The robot transitions between states when certain conditions are met.

Explanation of Each Case:

public void autonomousPathUpdate() {

switch (pathState) {

case 0: // Move from start to scoring position

follower.followPath(scorePreload);

setPathState(1);

break;

case 1: // Wait until the robot is near the scoring position

if (!follower.isBusy()) {

follower.followPath(grabPickup1, true);

setPathState(2);

}

break;

case 2: // Wait until the robot is near the first sample pickup position

if (!follower.isBusy()) {

follower.followPath(scorePickup1, true);

setPathState(3);

}

break;

case 3: // Wait until the robot returns to the scoring position

if (!follower.isBusy()) {

follower.followPath(grabPickup2, true);

setPathState(4);

}

break;

case 4: // Wait until the robot is near the second sample pickup position

if (!follower.isBusy()) {

follower.followPath(scorePickup2, true);

setPathState(5);

}

break;

case 5: // Wait until the robot returns to the scoring position

if (!follower.isBusy()) {

follower.followPath(grabPickup3, true);

setPathState(6);

}

break;

case 6: // Wait until the robot is near the third sample pickup position

if (!follower.isBusy()) {

follower.followPath(scorePickup3, true);

setPathState(7);

}

break;

case 7: // Wait until the robot returns to the scoring position

if (!follower.isBusy()) {

follower.followPath(park, true);

setPathState(8);

}

break;

case 8: // Wait until the robot is near the parking position

if (!follower.isBusy()) {

setPathState(-1); // End the autonomous routine

}

break;

}

}

public void setPathState(int pState) {

pathState = pState;

pathTimer.resetTimer();

}

Each if statement ensures the robot reaches a specific position (x, y) before proceeding to the next state. This prevents premature transitions and ensures precise execution.

Step Five: Initialization and Loop Methods

The initialization phase sets up paths and timers, while the loop ensures continuous updates during autonomous.

Constants.setConstants(FConstants.class, LConstants.class); ensures that FollowerConstants and the Localizer Constants are updated with the user-defined values.

Note: It is NECESSARY to call Constants.setConstants(FConstants.class, LConstants.class); before initalizing your follower.

@Override

public void init() {

pathTimer = new Timer();

Constants.setConstants(FConstants.class, LConstants.class);

follower = new Follower(hardwareMap);

follower.setStartingPose(startPose);

buildPaths();

}

@Override

public void loop() {

follower.update();

autonomousPathUpdate();

telemetry.addData("Path State", pathState);

telemetry.addData("Position", follower.getPose().toString());

telemetry.update();

}

Example Auto

This is an example auto taken from the Quickstart

It serves as a template for an Into the Deep Bucket Side autonomous, and it scores a neutral preload and then grabs 3 from the spike marks and scores them before parking. It can be viewed here on Github

package pedroPathing.examples;

import com.pedropathing.follower.Follower;

import com.pedropathing.localization.Pose;

import com.pedropathing.pathgen.BezierCurve;

import com.pedropathing.pathgen.BezierLine;

import com.pedropathing.pathgen.Path;

import com.pedropathing.pathgen.PathChain;

import com.pedropathing.pathgen.Point;

import com.pedropathing.util.Constants;

import com.pedropathing.util.Timer;

import com.qualcomm.robotcore.eventloop.opmode.Autonomous;

import com.qualcomm.robotcore.eventloop.opmode.OpMode;

import pedroPathing.constants.FConstants;

import pedroPathing.constants.LConstants;

/**

* This is an example auto that showcases movement and control of two servos autonomously.

* It is a 0+4 (Specimen + Sample) bucket auto. It scores a neutral preload and then pickups 3 samples from the ground and scores them before parking.

* There are examples of different ways to build paths.

* A path progression method has been created and can advance based on time, position, or other factors.

*

* @author Baron Henderson - 20077 The Indubitables

* @version 2.0, 11/28/2024

*/

@Autonomous(name = "Example Auto Blue", group = "Examples")

public class ExampleBucketAuto extends OpMode {

private Follower follower;

private Timer pathTimer, actionTimer, opmodeTimer;

/** This is the variable where we store the state of our auto.

* It is used by the pathUpdate method. */

private int pathState;

/* Create and Define Poses + Paths

* Poses are built with three constructors: x, y, and heading (in Radians).

* Pedro uses 0 - 144 for x and y, with 0, 0 being on the bottom left.

* (For Into the Deep, this would be Blue Observation Zone (0,0) to Red Observation Zone (144,144).)

* Even though Pedro uses a different coordinate system than RR, you can convert any roadrunner pose by adding +72 both the x and y.

* This visualizer is very easy to use to find and create paths/pathchains/poses: <https://pedro-path-generator.vercel.app/>

* Lets assume our robot is 18 by 18 inches

* Lets assume the Robot is facing the human player and we want to score in the bucket */

/** Start Pose of our robot */

private final Pose startPose = new Pose(9, 111, Math.toRadians(270));

/** Scoring Pose of our robot. It is facing the submersible at a -45 degree (315 degree) angle. */

private final Pose scorePose = new Pose(14, 129, Math.toRadians(315));

/** Lowest (First) Sample from the Spike Mark */

private final Pose pickup1Pose = new Pose(37, 121, Math.toRadians(0));

/** Middle (Second) Sample from the Spike Mark */

private final Pose pickup2Pose = new Pose(43, 130, Math.toRadians(0));

/** Highest (Third) Sample from the Spike Mark */

private final Pose pickup3Pose = new Pose(49, 135, Math.toRadians(0));

/** Park Pose for our robot, after we do all of the scoring. */

private final Pose parkPose = new Pose(60, 98, Math.toRadians(90));

/** Park Control Pose for our robot, this is used to manipulate the bezier curve that we will create for the parking.

* The Robot will not go to this pose, it is used a control point for our bezier curve. */

private final Pose parkControlPose = new Pose(60, 98, Math.toRadians(90));

/* These are our Paths and PathChains that we will define in buildPaths() */

private Path scorePreload, park;

private PathChain grabPickup1, grabPickup2, grabPickup3, scorePickup1, scorePickup2, scorePickup3;

/** Build the paths for the auto (adds, for example, constant/linear headings while doing paths)

* It is necessary to do this so that all the paths are built before the auto starts. **/

public void buildPaths() {

/* There are two major types of paths components: BezierCurves and BezierLines.

* * BezierCurves are curved, and require >= 3 points. There are the start and end points, and the control points.

* - Control points manipulate the curve between the start and end points.

* - A good visualizer for this is [this](https://pedro-path-generator.vercel.app/).

* * BezierLines are straight, and require 2 points. There are the start and end points.

* Paths have can have heading interpolation: Constant, Linear, or Tangential

* * Linear heading interpolation:

* - Pedro will slowly change the heading of the robot from the startHeading to the endHeading over the course of the entire path.

* * Constant Heading Interpolation:

* - Pedro will maintain one heading throughout the entire path.

* * Tangential Heading Interpolation:

* - Pedro will follows the angle of the path such that the robot is always driving forward when it follows the path.

* PathChains hold Path(s) within it and are able to hold their end point, meaning that they will holdPoint until another path is followed.

* Here is a explanation of the difference between Paths and PathChains <https://pedropathing.com/commonissues/pathtopathchain.html> */

/* This is our scorePreload path. We are using a BezierLine, which is a straight line. */

scorePreload = new Path(new BezierLine(new Point(startPose), new Point(scorePose)));

scorePreload.setLinearHeadingInterpolation(startPose.getHeading(), scorePose.getHeading());

/* Here is an example for Constant Interpolation

scorePreload.setConstantInterpolation(startPose.getHeading()); */

/* This is our grabPickup1 PathChain. We are using a single path with a BezierLine, which is a straight line. */

grabPickup1 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup1Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup1Pose.getHeading())

.build();

/* This is our scorePickup1 PathChain. We are using a single path with a BezierLine, which is a straight line. */

scorePickup1 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup1Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup1Pose.getHeading(), scorePose.getHeading())

.build();

/* This is our grabPickup2 PathChain. We are using a single path with a BezierLine, which is a straight line. */

grabPickup2 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup2Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup2Pose.getHeading())

.build();

/* This is our scorePickup2 PathChain. We are using a single path with a BezierLine, which is a straight line. */

scorePickup2 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup2Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup2Pose.getHeading(), scorePose.getHeading())

.build();

/* This is our grabPickup3 PathChain. We are using a single path with a BezierLine, which is a straight line. */

grabPickup3 = follower.pathBuilder()

.addPath(new BezierLine(new Point(scorePose), new Point(pickup3Pose)))

.setLinearHeadingInterpolation(scorePose.getHeading(), pickup3Pose.getHeading())

.build();

/* This is our scorePickup3 PathChain. We are using a single path with a BezierLine, which is a straight line. */

scorePickup3 = follower.pathBuilder()

.addPath(new BezierLine(new Point(pickup3Pose), new Point(scorePose)))

.setLinearHeadingInterpolation(pickup3Pose.getHeading(), scorePose.getHeading())

.build();

/* This is our park path. We are using a BezierCurve with 3 points, which is a curved line that is curved based off of the control point */

park = new Path(new BezierCurve(new Point(scorePose), /* Control Point */ new Point(parkControlPose), new Point(parkPose)));

park.setLinearHeadingInterpolation(scorePose.getHeading(), parkPose.getHeading());

}

/** This switch is called continuously and runs the pathing, at certain points, it triggers the action state.

* Everytime the switch changes case, it will reset the timer. (This is because of the setPathState() method)

* The followPath() function sets the follower to run the specific path, but does NOT wait for it to finish before moving on. */

public void autonomousPathUpdate() {

switch (pathState) {

case 0:

follower.followPath(scorePreload);

setPathState(1);

break;

case 1:

/* You could check for

- Follower State: "if(!follower.isBusy() {}"

- Time: "if(pathTimer.getElapsedTimeSeconds() > 1) {}"

- Robot Position: "if(follower.getPose().getX() > 36) {}"

*/

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the scorePose's position */

if(!follower.isBusy()) {

/* Score Preload */

/* Since this is a pathChain, we can have Pedro hold the end point while we are grabbing the sample */

follower.followPath(grabPickup1,true);

setPathState(2);

}

break;

case 2:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the pickup1Pose's position */

if(!follower.isBusy()) {

/* Grab Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are scoring the sample */

follower.followPath(scorePickup1,true);

setPathState(3);

}

break;

case 3:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the scorePose's position */

if(!follower.isBusy()) {

/* Score Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are grabbing the sample */

follower.followPath(grabPickup2,true);

setPathState(4);

}

break;

case 4:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the pickup2Pose's position */

if(!follower.isBusy()) {

/* Grab Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are scoring the sample */

follower.followPath(scorePickup2,true);

setPathState(5);

}

break;

case 5:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the scorePose's position */

if(!follower.isBusy()) {

/* Score Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are grabbing the sample */

follower.followPath(grabPickup3,true);

setPathState(6);

}

break;

case 6:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the pickup3Pose's position */

if(!follower.isBusy()) {

/* Grab Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are scoring the sample */

follower.followPath(scorePickup3, true);

setPathState(7);

}

break;

case 7:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the scorePose's position */

if(!follower.isBusy()) {

/* Score Sample */

/* Since this is a pathChain, we can have Pedro hold the end point while we are parked */

follower.followPath(park,true);

setPathState(8);

}

break;

case 8:

/* This case checks the robot's position and will wait until the robot position is close (1 inch away) from the scorePose's position */

if(!follower.isBusy()) {

/* Level 1 Ascent */

/* Set the state to a Case we won't use or define, so it just stops running an new paths */

setPathState(-1);

}

break;

}